Well, I might have crafted a little solution. I use the word MIGHT very loosely, because I don't have any log data to test it against, let alone 500,000 lines of it. I'm not even sure whether the change I made in the JS code is enough by itself, or whether more needs to be done.

View attachment 96923

Close Trunking Recorder and browse to this folder:

C:\Program Files\Trunking Recorder\Website\js

Right-click TrunkingRecorder.js and click Properties. On the Security tab, highlight Users in the upper box, tick the Modify checkbox in the lower box, then click OK.

View attachment 96930

View attachment 96932

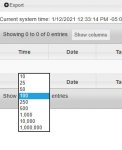

Now right-click TrunkingRecorder.js again and click Edit. Scroll down, find this line, and add more values like I have; the original copy should only go up to 500. Then save, restart Trunking Recorder, and load its webpage to see if it works. I honestly don't know if this will work, and it could well bring the browser or the computer to its knees trying to parse that much data. If it's no good, just scale back the numbers or revert to the original maximum of 500.
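In case the screenshot doesn't load, here is a rough sketch of the kind of change I mean. This is NOT the actual line from TrunkingRecorder.js: the variable name, the helper function, and every value other than the 500 cap are made up for illustration, and I'm only assuming the setting is a plain list of page-size numbers. The idea is just to tack bigger numbers onto the end of the existing list.

```javascript
// Hypothetical stand-in for the list of page sizes in TrunkingRecorder.js.
// The real name and values are in the screenshot; only the 500 cap is known.
const originalPageSizes = [10, 25, 50, 100, 250, 500];

// Hypothetical helper: merge in larger values, drop duplicates,
// and keep the list in ascending order.
function extendPageSizes(sizes, extra) {
  const merged = new Set([...sizes, ...extra]);
  return [...merged].sort((a, b) => a - b);
}

// Example larger values; pick whatever the browser can actually cope with.
const extendedPageSizes = extendPageSizes(originalPageSizes, [1000, 5000, 10000]);
console.log(extendedPageSizes);
```

Keeping the original list in its own variable makes reverting easy: if the bigger values choke the browser, just go back to the original list.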

View attachment 96933